The $19,000/Minute PostgreSQL Out-Of-Memory Disaster (and How to Prevent It)

I watched a $1B product crash due to the hidden math of work_mem in PostgreSQL default settings. Learn how to identify volatile memory spikes and prevent the OOM killer disaster.

It wasn’t a malicious attack or a bad deploy. It was a textbook Out-Of-Memory (OOM) error.

The scary part: entire production system went down for 10 minutes, costing approximately $190,000 in lost revenue.

Here’s the terrifying truth about that 10-minute downtime:

PostgreSQL didn’t shut down gracefully; it crashed.

The 10 minutes was just the time it took to finish crash recovery (WAL replay).

They were only saved from hours of downtime by a robust High Availability (HA) setup.

But the real time bomb was still ticking…

Why did the OOM happen? It could crash again at any moment.

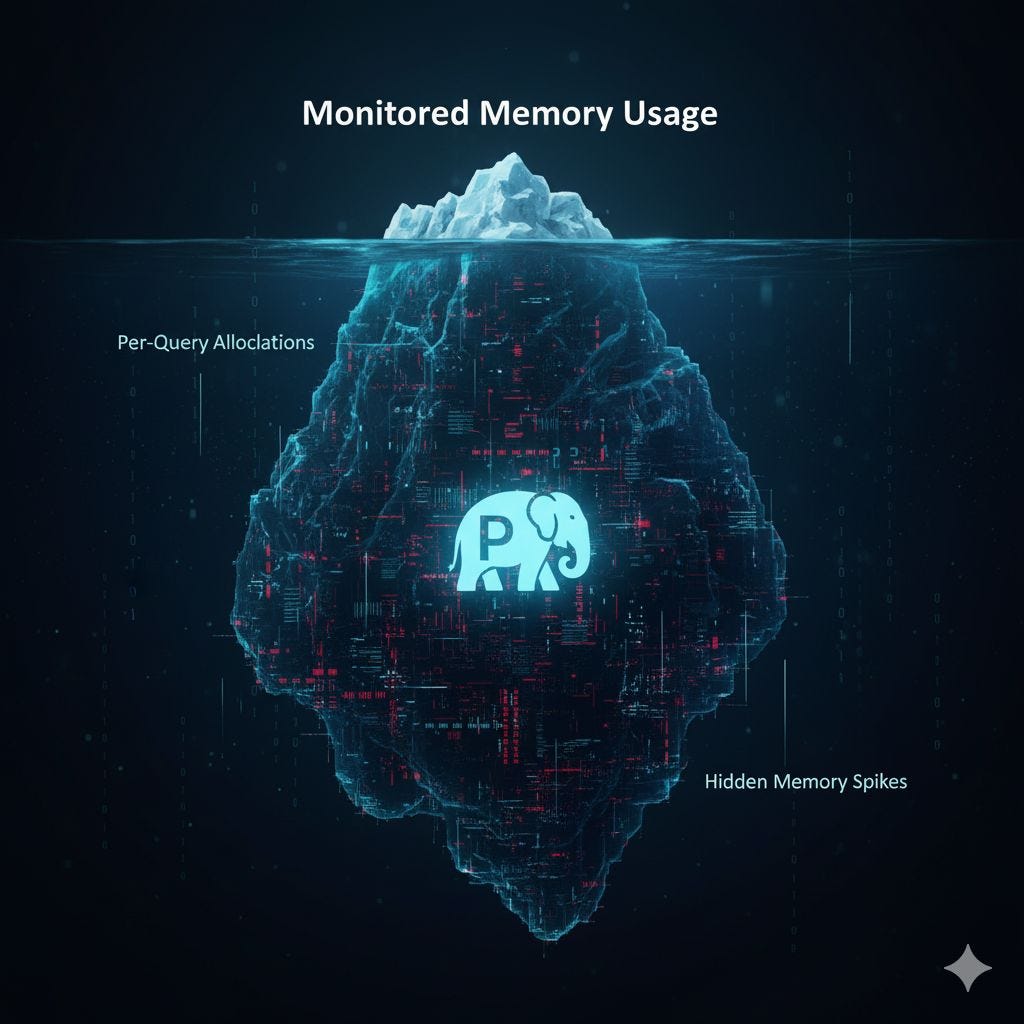

This is the truth your memory monitoring dashboard is hiding from you.

Trap: Default Settings

When we checked server metrics, the overall memory usage looked fine. This is the ultimate memory monitoring trap: Your average usage dashboard is dangerously misleading you.

The culprit was relying on these four PostgreSQL default settings:

shared_buffers: Reserved block cache shared across all connections.maintenance_work_mem: Used for VACUUM,CREATE INDEX, etc.max_connections: Max number of concurrent connections.work_mem: Per sort/hash operation, per query. (THE TIME BOMB)

The combination of these parameters is volatile, but the setting that caused the catastrophic OOM killer event was almost certainly work_mem.

The hidden Math of work_mem: Why Your RAM Disappears

PostgreSQL memory use isn’t just global; it’s also per session/per query.

This is the critical detail missed by many database engineers relying on default configurations: A single connection can run a complex query that uses multiple sort and hash operations in parallel.

This means the actual potential memory consumption is calculated by:

Actual Work Memory≥work_mem×(# of concurrent sorts/joins across all active sessions)

If you leave the default work_mem at 4MB, it seems small. But if you have max_connections set to 500, running a handful of complex reporting queries simultaneously can trigger dozens of concurrent memory-intensive operations.

Suddenly, those small, per-operation allocations aggregate into massive, temporary memory blocks that can instantly push the system past its physical RAM limit. BOOM. OOM Killer strikes.

In this $190,000 disaster, the system went from appearing healthy to shutdown in seconds because of this silent, spiking pattern.

Written after 950+ real-world incidents including 70% postgresql sev1 incident cases with Fortune 100 companies under intense C-level pressure, this is the mindset guide for every DBA, SRE, and Engineering Leader.

This isn’t about fancy tools or dashboards. It’s about how you think, act, and lead when the clock starts ticking and everyone’s watching.

It teaches the Detect → Contain → Communicate → Fix → Prevent habit loop so you stop guessing under pressure. Also you will get 25+ practical queries.

🚀 Discover the new PostgreSQL Health Report one SQL file to diagnose Sev-1 incidents in minutes and fix them fast!

How to Prevent the PostgreSQL OOM Disaster

To stop this spike pattern and prevent the OOM killer from ruining your day, you must stop trusting average usage graphs and start understanding the per-query/per-process memory model.

Here is the tuning lesson learned:

1. Identify the Hungry Queries

We used monitoring tools (Azure PostgreSQL offers Query Store) to identify a handful of specific reporting queries that were manipulating over 100 GB of memory at peak usage. These were the queries running huge ORDER BY or JOIN operations that were causing the disk-writing (temp files) or, worse, the full in-memory allocation.

2. Tune work_mem at the Session Level

Instead of raising work_mem globally (which creates the potential for total memory exhaustion), use the SET work_mem command before the hungry queries are executed.

For 99% of your application queries, keep

work_memlow (e.g., 4MB).

For known, resource-intensive reporting queries, raise it specifically for that session, execute the query, and then reset it. This gives the query the room it needs without risking the whole cluster.

3. Balance the Four Parameters

A perfect solution requires carefully adjusting the four volatile memory parameters in postgresql.conf with recommended Action::

- shared_buffers: Reserved block cache shared across all connections > I recommend customers this to set to 25% of your total system RAM.

- maintenance_work_mem: Used for VACUUM, CREATE INDEX, etc. > Set high for maintenance (e.g., 1GB - 2GB), but understand it’s only used by a few processes at a time.

- max_connections: Max number of concurrent connections > Keep this value as low as possible. Use a connection pooler (like PgBouncer) to multiplex connections and reduce PostgreSQL’s overhead.

- work_mem: Per sort/hash operation, per query. (THE TIME BOMB) > Keep the global value low (e.g., 64MB-128MB) and tune the specific heavy queries using the session-level SET command.

1000+ incidents.

70% Sev1.

Fortune 100 pressure.

One mindset that works every time and 15+ practical queries.

Also you will get 25+ practical queries.

The Lesson

The $19,000 per minute mistake was not a technical failure of hardware; it was a conceptual failure to understand PostgreSQL’s concurrent memory model.

You cannot manage what you do not understand. Stop trusting the average usage graphs and start understanding the per-query/per-process memory model. Prevent your next disaster by proactively tuning your default settings.

Want the Deep Stuff? Become a Paid Member

Paid members get instant access to:

Premium content with Deep dive into full RCA analysis, get actionable scripts, and Ask Me Anything via Email for Mentorship.