What I Capture During a PostgreSQL Sev‑1 So My RCA Isn’t Guesswork

Learn why PostgreSQL root cause analysis often fails after Sev-1 incidents, how evidence disappears, and how DBAs should approach incident RCA correctly.

Table of Contents

The PostgreSQL Sev-1 Pattern Everyone Recognizes

Why PostgreSQL Root Cause Analysis Often Feels Unsatisfying

RCA Structure

The Real Problem: PostgreSQL Evidence Disappears After Recovery

The Shift That Fixes PostgreSQL RCA: Start During the Sev-1

What Evidence Matters During a PostgreSQL Sev-1 Incident

Redefining Root Cause in PostgreSQL Incident Analysis

Weak vs. Strong PostgreSQL RCA: (Examples)

What to Do Before the Next PostgreSQL Sev-1

Practical Next Step (and the fastest way to stop high load RCAs)

FAQ

The PostgreSQL Sev-1 Pattern Everyone Recognizes

If you manage PostgreSQL in production long enough, you’ve lived this exact database incident response scenario:

PostgreSQL latency increases

Application errors appear

Database monitoring alerts fire

Traffic backs up

A Sev-1 incident is declared

The PostgreSQL database is stabilized

Then, after recovery, leadership asks:

What was the root cause of this PostgreSQL outage?

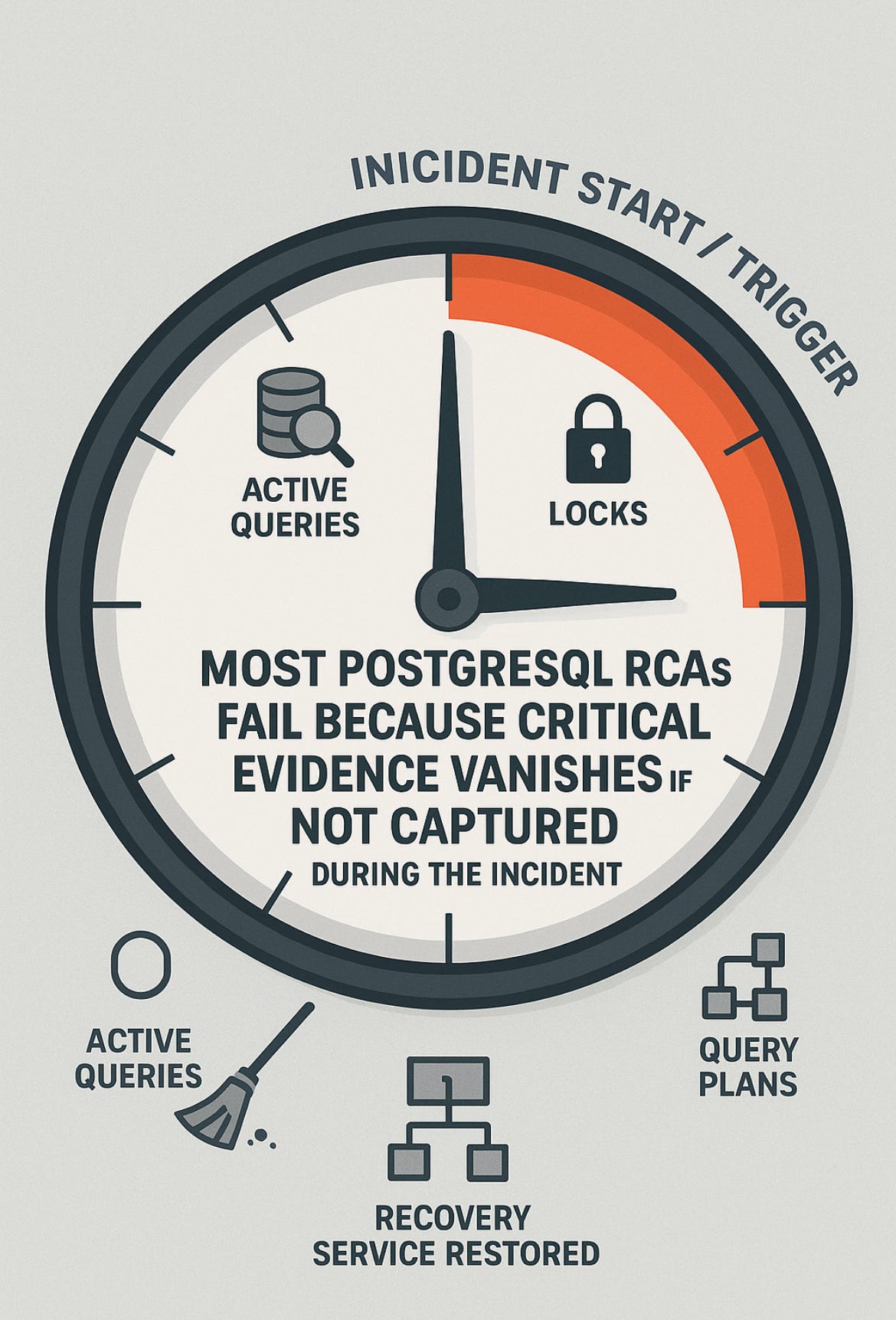

This is where many PostgreSQL Root Cause Analysis (RCA) efforts begin and quietly fail. Not because the DBA team lacks skill, but because database RCA often starts after the evidence has already disappeared.

Why PostgreSQL Root Cause Analysis Often Feels Unsatisfying

Common conclusions look like this:

High load caused slow queries

Lock contention blocked transactions

Autovacuum caused performance issues

A missing index was identified

These are symptoms. They describe what PostgreSQL was doing, not why a Sev-1 became inevitable.

Effective database incident root cause analysis must explain timing, amplification, and inevitability, not just symptoms.

RCA Structure

1. Requirement

What problem are we analyzing?

Clearly define the scope and impact of the incident.

2. What Good Looks Like

What should have happened?

Describe expected behavior, SLAs, and success criteria.

3. Timing

Why did this happen now?

Link the incident to a trigger such as a change, growth threshold, deployment, or traffic shift.

4. Amplification

What caused the issue to snowball?

Identify factors like pool saturation, retries, lock chains, or queueing collapse.

5. Inevitability

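What made the failure unavoidable?

Name the missing control, such as an absent timeout or an unguarded migration, that left nothing to stop the failure once it was triggered.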

The Real Problem: PostgreSQL Evidence Disappears After Recovery

PostgreSQL is highly adaptive. After recovery:

blocking ends

lock queues clear

pg_stat_activity looks normal

query patterns stabilize

autovacuum catches up

logs rotate

By the time a formal Sev-1 RCA happens, teams rely on memory and graphs, so the postmortem becomes guesswork.

This is why many database postmortems feel vague, defensive, or inconclusive.

The Shift That Fixes PostgreSQL RCA: Start During the Sev-1

A critical mindset shift for DBAs:

PostgreSQL root cause analysis starts during incident response, not after recovery.

The primary goal during a Sev-1 database outage is restoring service.

The secondary goal, equally important, is preserving RCA evidence.

You do not need every metric.

You need the evidence that explains cause, amplification, and failure of controls.

What Evidence Matters During a PostgreSQL Sev-1 Incident

1. Database Activity (PostgreSQL Workload Analysis)

Capturing active queries, execution time, and application names helps answer:

Which queries dominated PostgreSQL CPU or IO?

Was this normal workload or an abnormal spike?

Which application or service triggered database pressure?

Without this data, PostgreSQL RCA defaults to:

The database experienced high load

Which provides no actionable insight.
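
One way to preserve this workload evidence is to copy the non-idle rows of pg_stat_activity into a table while the incident is still running. The sketch below is an illustration under stated assumptions, not a definitive tool: the table name incident_activity_snapshot is hypothetical, the column list assumes PostgreSQL 9.6 or later (for the wait_event columns), and the session running it needs enough privilege to see other users' queries.

```sql
-- Hypothetical evidence table; created empty the first time this runs.
CREATE TABLE IF NOT EXISTS incident_activity_snapshot AS
SELECT now() AS captured_at,
       pid, usename, application_name, client_addr,
       state, wait_event_type, wait_event,
       xact_start, query_start,
       now() - query_start AS running_for,
       query
FROM pg_stat_activity
WITH NO DATA;

-- Capture every session that is doing something, excluding this one.
-- Re-run every few seconds during the incident to build a timeline.
INSERT INTO incident_activity_snapshot
SELECT now(),
       pid, usename, application_name, client_addr,
       state, wait_event_type, wait_event,
       xact_start, query_start,
       now() - query_start,
       query
FROM pg_stat_activity
WHERE state <> 'idle'
  AND pid <> pg_backend_pid();

-- Quick read on which application is generating the pressure.
SELECT application_name,
       state,
       count(*)                      AS sessions,
       max(now() - query_start)      AS longest_running
FROM pg_stat_activity
WHERE pid <> pg_backend_pid()
GROUP BY application_name, state
ORDER BY sessions DESC;
```

The INSERT only works on a writable primary. On a read-only replica, or when you would rather not add writes to a struggling server, run the SELECT alone and save the output on the client side instead.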

2. Lock Contention and Blocking Analysis in PostgreSQL

Lock-related PostgreSQL outages are especially difficult to analyze post-incident because lock contention disappears after recovery.

Preserved lock data answers:

Which sessions were blocked?

What PostgreSQL lock types were involved?

Why did the application workload fail to tolerate locking?

This transforms RCA language from:

PostgreSQL was blocked

to

A DDL operation acquired an AccessExclusiveLock during peak traffic, blocking critical transactions.
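
The blocking questions above can be answered with a query along these lines. It is a sketch that assumes PostgreSQL 9.6 or later, where pg_blocking_pids() is available; the output column names are my own choices.

```sql
-- Who is blocked, and by whom. One row per (blocked, blocking) pair.
SELECT now()                            AS captured_at,
       blocked.pid                      AS blocked_pid,
       blocked.application_name         AS blocked_app,
       now() - blocked.query_start      AS blocked_query_age,  -- age of the statement, not of the wait itself
       blocking.pid                     AS blocking_pid,
       blocking.application_name        AS blocking_app,
       blocking.state                   AS blocking_state,     -- 'idle in transaction' is a common culprit
       now() - blocking.xact_start      AS blocking_xact_age,
       left(blocked.query, 200)         AS blocked_query,
       left(blocking.query, 200)        AS blocking_query
FROM pg_stat_activity AS blocked
CROSS JOIN LATERAL unnest(pg_blocking_pids(blocked.pid)) AS b(pid)
JOIN pg_stat_activity AS blocking ON blocking.pid = b.pid
ORDER BY blocked_query_age DESC;

-- Which lock types and modes are being waited on right now.
SELECT l.pid,
       l.locktype,
       l.mode,
       l.relation::regclass AS relation
FROM pg_locks AS l
WHERE NOT l.granted;
```

A blocking session that is itself blocked will appear in both columns, which is how you trace a lock chain back to its head.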

3. Query Performance and Plan Regression Analysis

Many PostgreSQL Sev-1 incidents are triggered by query plan regressions or sudden changes in query execution behavior.

Evidence answers:

Did query execution counts spike?

Did a query plan change due to statistics or data growth?

Did a previously safe query become dominant?

This elevates RCA from:

A slow query caused the issue

to

Data growth and stale statistics caused a query plan regression that amplified load.
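
To answer these questions later, it helps to save the query statistics as they looked during the incident. The sketch below assumes the pg_stat_statements extension is installed and that the server is PostgreSQL 13 or later; on older versions the timing columns are named total_time and mean_time instead.

```sql
-- Top statements by total execution time since the last stats reset.
SELECT now()               AS captured_at,
       queryid,
       calls,
       total_exec_time,    -- milliseconds
       mean_exec_time,     -- milliseconds
       rows,
       shared_blks_hit,
       shared_blks_read,
       left(query, 200)    AS query
FROM pg_stat_statements
ORDER BY total_exec_time DESC
LIMIT 20;

-- Tables whose planner statistics are most likely to be stale.
SELECT relname,
       n_live_tup,
       n_mod_since_analyze,
       last_analyze,
       last_autoanalyze
FROM pg_stat_user_tables
ORDER BY n_mod_since_analyze DESC
LIMIT 20;
```

pg_stat_statements counters are cumulative, so a single capture shows totals rather than the spike. Taking two captures a minute or so apart and comparing calls and total_exec_time per queryid shows which statements grew during the incident.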

4. Autovacuum, Bloat, and Transaction Debt Analysis

Autovacuum frequently appears in PostgreSQL outage postmortems, often incorrectly blamed as the cause.

Preserved vacuum and bloat indicators help determine:

Whether autovacuum was reacting to accumulated technical debt

Whether long-running transactions blocked cleanup

Whether table growth outpaced maintenance capacity

This reframes the RCA from:

Autovacuum caused the PostgreSQL outage

to

Transaction patterns and bloat forced autovacuum into emergency behavior.
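
The vacuum-related indicators can be captured with a few catalog queries. This is a sketch assuming PostgreSQL 9.6 or later (for pg_stat_progress_vacuum); the row limits are arbitrary.

```sql
-- Oldest open transactions. These hold back the cleanup horizon,
-- so vacuum cannot remove dead rows newer than their snapshot.
SELECT pid,
       application_name,
       state,
       now() - xact_start   AS xact_age,
       age(backend_xmin)    AS xmin_age,   -- in transaction IDs, NULL if no snapshot is held
       left(query, 200)     AS query
FROM pg_stat_activity
WHERE xact_start IS NOT NULL
ORDER BY xact_start
LIMIT 10;

-- Tables carrying the most dead tuples, and when vacuum last finished on them.
SELECT relname,
       n_live_tup,
       n_dead_tup,
       last_vacuum,
       last_autovacuum
FROM pg_stat_user_tables
ORDER BY n_dead_tup DESC
LIMIT 20;

-- Vacuum operations in progress right now.
SELECT pid,
       relid::regclass AS table_name,
       phase,
       heap_blks_total,
       heap_blks_scanned
FROM pg_stat_progress_vacuum;
```

If the first query shows a transaction that has been open for hours and the second shows dead tuples piling up on busy tables, autovacuum was most likely a victim rather than the cause.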

Redefining Root Cause in PostgreSQL Incident Analysis

In PostgreSQL performance troubleshooting:

High CPU usage is not a root cause

Lock waits are not a root cause

Autovacuum activity is not a root cause

Root cause is:

The earliest preventable decision that made the PostgreSQL incident inevitable.

This could be:

migrations shipped without guardrails (lock_timeout, safe rollout)

unbounded batch workloads

no statement timeout

missing visibility into long-running transactions

stats maintenance not keeping up with data growth
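
As an illustration of what such guardrails can look like, here is a minimal sketch. The table orders, the column note, the role app_user, and every timeout value are hypothetical; pick names and limits that fit your own workload.

```sql
-- Migration guardrail: fail fast instead of waiting for the lock while
-- every later query on the table queues up behind the pending DDL.
BEGIN;
SET LOCAL lock_timeout = '5s';
ALTER TABLE orders ADD COLUMN note text;   -- hypothetical table and column
COMMIT;

-- Bound statement runtime and idle-in-transaction time for the application role.
-- These apply to new sessions opened by that role.
ALTER ROLE app_user SET statement_timeout = '30s';
ALTER ROLE app_user SET idle_in_transaction_session_timeout = '5min';

-- Log sessions that wait on a lock longer than deadlock_timeout,
-- so lock evidence survives in the server log after recovery.
ALTER SYSTEM SET log_lock_waits = on;
SELECT pg_reload_conf();
```

If the lock cannot be acquired within the timeout, the ALTER TABLE fails and the transaction rolls back, so the migration can be retried at a quieter time. ALTER SYSTEM requires superuser rights and is often unavailable on managed services, where the same setting is usually changed through the provider's parameter configuration.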

Weak vs. Strong PostgreSQL RCA: (Examples)

Example 1: High load

Weak RCA (symptom):

High load caused latency.

Strong RCA (root cause + timing + amplification):

A new batch job launched at 02:14 ran 200 parallel queries, saturated the connection pool, and retries amplified load until service failure.

Example 2: Lock contention

Weak RCA (symptom):

Lock contention blocked transactions.

Strong RCA (root cause + timing + amplification):

A migration acquired an AccessExclusiveLock during peak traffic; blocked requests queued, exhausted the pool, and the application’s retry policy multiplied traffic.

Example 3: Autovacuum

Weak RCA (symptom):

Autovacuum caused performance issues.

Strong RCA (root cause + timing + amplification):

A 72-hour transaction blocked vacuum, bloat accumulated, and emergency maintenance collided with peak workload.

Example 4: Slow query

Weak RCA (symptom):

A slow query was identified.

Strong RCA (root cause + timing + amplification):

Data growth plus stale statistics triggered a plan regression (index scan → sequential scan), making one query dominant and amplifying I/O.

What to Do Before the Next PostgreSQL Sev-1

Decide the evidence you always capture (workload, locks, query stats, vacuum debt, timeline).

Automate snapshots.

Treat evidence as incident-critical data, not nice to have.

Because the worst outcome isn’t downtime; it’s recovering without knowing why it happened.

Practical Next Step (and the fastest way to stop high load RCAs)

If you want this to be repeatable at 2AM, package it into a single command.

That’s exactly what the PostgreSQL Health Report Function does: it captures a broad incident snapshot (connections, locks, wait events, query hotspots, vacuum/bloat, index health, replication, checkpoints) and returns prioritized remediation commands.

If you want one command to capture RCA evidence before it disappears, run it the moment a Sev-1 is declared:

SELECT * FROM public.run_health_report();

SELECT * FROM public.health_critical;

SELECT * FROM public.health_score;

SELECT * FROM public.health_alerts;

Price: $29 – Includes 2 Files: 👉 [Buy the Updated PostgreSQL Health Report]

FAQ

What is PostgreSQL root cause analysis?

PostgreSQL RCA identifies the earliest preventable decision that made a Sev-1 inevitable, explaining timing and amplification, not just symptoms.

Why do PostgreSQL RCAs often fail?

They start after recovery, when locks clear, sessions end, stats normalize, and logs may rotate, so evidence is missing.

Is autovacuum usually the root cause of PostgreSQL outages?

No. Autovacuum is rarely the root cause. It usually reacts to deeper issues like transaction debt, table bloat, or long-running transactions.

What evidence should DBAs collect during a Sev-1 PostgreSQL incident?

Active queries and execution times

Lock contention and blocking chains

Query execution frequency and plan behavior

Vacuum, bloat, and transaction age signals

A written timeline of every change you make, with timestamps

How can DBAs prevent repeated PostgreSQL Sev-1 incidents?

Capture RCA evidence during incidents

Identify amplification patterns (locks, regressions, bloat)

Add guardrails instead of only alerts

Write RCAs that drive architectural improvements

If you’ve handled a PostgreSQL Sev-1 and struggled to explain the real cause, this publication is for you.

Follow for practical insights, postmortems, and frameworks to make your next RCA meaningful and actionable.
